About VLAR 2023

The focus of this workshop is to bring together researchers in multimodal reasoning and cognitive models of intelligence, towards positioning the current research progress in AI within the overarching goal of achieving machine intelligence. An important focus of this workshop is to bring to the forefront problems in perception, language modeling, and cognition that are often overlooked in state-of-the-art research and that are important for making true progress in AI. One specific problem that motivated our workshop is the question of how well current deep models learn broad yet simple skills and how well do they generalize their learned models to solve problems that are not part of their learning set; such skills even children learn and use effortlessly. We attempt to look into this aspect of intelligence in the CVPR 2023 paper titled: Are Deep Neural Networks SMARTer than Second Graders? In this workshop, we plan to bring together outstanding faculty/researchers working at the intersections of vision, language, and cognition to provide their opinions on the recent breakthroughs, as well as showcase their cutting edge research on the above topics that could inspire the audience to search for the missing pieces in our quest for artificial intelligence.

Where

Paris Convention Centre (Room S2) and YouTube Live Stream

When

08:45 AM - 05:45 PM on October 3, 2023

Keynote Speakers

Anima Anandkumar

NVIDIA & Caltech

François Chollet

Jitendra Malik

Meta & UC Berkeley

Elizabeth Spelke

Harvard University

Jiajun Wu

Stanford University

Oral Paper Presentation Oliveira Souza et al.

SelfGraphVQA: A Self-Supervised Graph Neural Network for Scene-based Question Answering

[video]

Spotlight Paper Presentation L. Zhang et al.

What If the TV Was Off? Examining Counterfactual Reasoning Abilities of Multi-modal Language Models

[video]

Spotlight Paper Presentation S. Jahagirdar et al.

Understanding Video Scenes through Text: Insights from Text-based Video Question Answering

[video]

Spotlight Paper Presentation M. Ahmad et al.

MMTF: Multi-Modal Temporal Fusion for Commonsense Video Question Answering

[video]

Spotlight Paper Presentation C. Chen et al.

VQA Therapy: Exploring Answer Differences by Visually Grounding Answers

[video]

Spotlight Q&A

Coffee Break

Oral Paper Presentation R. Oshima et al.

Pointing out Human Answer Mistakes in a Goal-Oriented Visual Dialogue

Oral Paper Presentation M. Li et al.

Iterative Robust Visual Grounding with Masked Reference based Centerpoint Supervision

Lunch Break

Oral Paper Presentation F. Sammani et al.

Uni-NLX: Unifying Textual Explanations for Vision and Vision-Language Tasks

[video]

Spotlight Paper Presentation F. Taioli et al.

Language-enhanced RNR-Map: Querying Renderable Neural Radiance Field Maps with Natural Language

[video]

Spotlight Paper Presentation M. Ali et al.

CLIP-Decoder: ZeroShot Multilabel Classification using Multimodal CLIP Aligned Representations

[video]

Spotlight Q&A

Keynote Elizabeth Spelke

Three Foundations for Children’s Learning: Perception, Language, and Core Knowledge

[slides]

Poster Session & Coffee Break

Keynote Anima Anandkumar & Christopher Choy

An Open-Ended Embodied Agent with Large Language Models

[video]

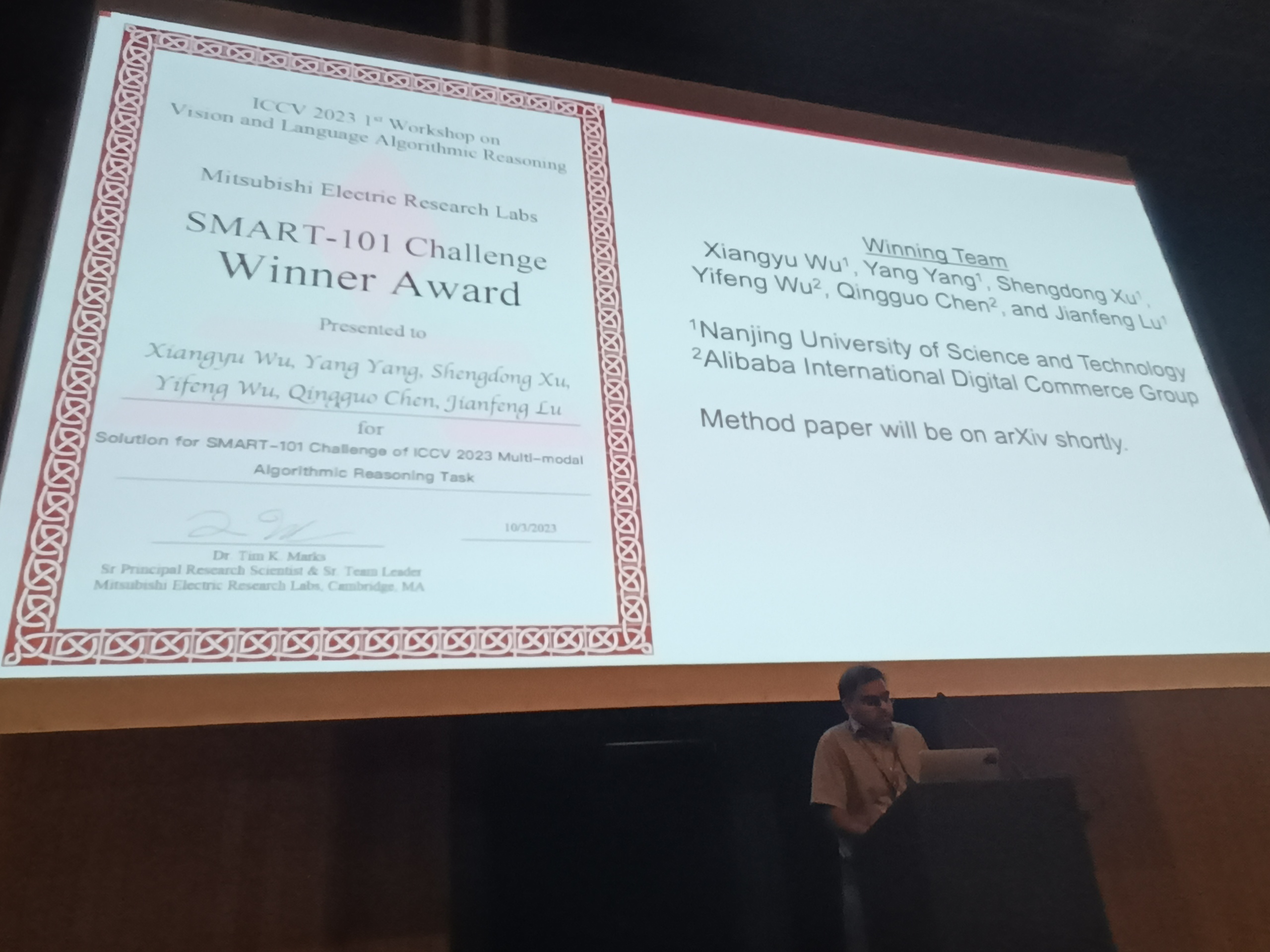

Award Ceremony

[video]

Closing Remarks Anoop Cherian

Paper Track: Submission Instructions

We welcome paper submissions up to 4 pages (excluding references or supplementary materials). Please submit at the

VLAR 2023 @ ICCV 2023 CMT website.

The paper submissions must be in pdf format and use the official ICCV 2023 templates. All submissions must be anonymous and conform to ICCV standards for double-blind review. The deadline for submitting the (optional) supplementary material is the same as the main paper deadline. The (optional) supplementary material should be submitted as a separate file from the main paper. The accepted papers will be included in the ICCV 2023 proceedings. The accepted papers will be presented as either an oral, spotlight, or poster presentation. At least one author of each accepted submission must present the paper at the workshop. The presentation of the accepted papers at VLAR 2023 will follow the same policy as that for the accepted papers of ICCV 2023.

Submission deadline (both main paper & (optional) supplementary material): July 20 24, 2023 (11:59PM EDT).

Notification to authors: August 7, 2023.

Camera ready deadline: August 21, 2023 (AoE time).

We invite the submission of original and high-quality research papers in the topics related to vision-and-language algorithmic reasoning. Accepted work will be presented as either an oral, spotlight, or poster presentation.

Paper Track: Topics

The topics for VLAR 2023 include, but are not limited to:

- Large language models, vision, and cognition including children’s cognition.

- Foundation models of intelligence, including vision, language, and other modalities.

- Artificial general intelligence / general-purpose problem solving architectures.

- Neural architectures for solving vision & language or language-based IQ puzzles.

- Embodiment and AI.

- Large language models, neuroscience, and vision.

- Functional and algorithmic / procedural learning in vision.

- Abstract visual-language reasoning, e.g., using sketches, diagrams, etc.

- Perceptual reasoning and decision making.

- Multimodal cognition and learning.

- New vision-and-language abstract reasoning tasks and datasets.

- Vision-and-language applications.

SMART-101 Challenge Track: Participation Instructions

As part of VLAR 2023, we are hosting a challenge based on the Simple Multimodal Algorithmic Reasoning Task – SMART-101 – dataset. The accompanying CVPR 2023 paper is: “Are Deep Neural Networks SMARTer than Second Graders?” The challenge will be hosted on Eval AI. The challenge participants are required to make arXiv submissions detailing their approach. These are only used to judge the competition, and will not be reviewed and will not be part of workshop proceedings. The winners of the challenge are determined both by performance on the leaderboard over a private test set as well as the novelty of the proposed method (as detailed in the arXiv submission). The details will be made available on the challenge website. The prizes will be awarded to the winners on the day of the workshop. The key dates of the challenge are as follows:

Challenge open: June 15, 2023.

Challenge details: SMART-101 Challenge page on Eval AI.

Submission deadline and arXiv paper deadline to be considered for awards: September 1 15, 2023 (11:59PM EDT).

Public winner announcement: October 3, 2023 (11:59PM EDT).

Oral Papers

- Uni-NLX: Unifying Textual Explanations for Vision and Vision-Language Tasks.

Fawaz Sammani, Nikos Deligiannis. - SelfGraphVQA: A Self-Supervised Graph Neural Network for Scene-based Question Answering.

Bruno Cesar de Oliveira Souza, Marius Aasan, Helio Pedrini, Adín Ramírez Rivera. - Iterative Robust Visual Grounding with Masked Reference based Centerpoint Supervision.

Menghao Li, Chunlei Wang, Wenquan Feng, Shuchang Lyu, Guangliang Cheng, Xiangtai Li, Binghao Liu, Qi Zhao. - Pointing out Human Answer Mistakes in a Goal-Oriented Visual Dialogue.

Ryosuke Oshima, Seitaro Shinagawa, Hideki Tsunashima, Qi Feng, Shigeo Morishima.

Spotlight Papers

- What If the TV Was Off? Examining Counterfactual Reasoning Abilities of Multi-modal Language Models.

Letian Zhang, Xiaotong Zhai, Zhongkai Zhao, Xin Wen, Bingchen Zhao. - Understanding Video Scenes through Text: Insights from Text-based Video Question Answering.

Soumya Shamarao Jahagirdar, Minesh Mathew, Dimosthenis Karatzas, C.V. Jawahar. - MMTF: Multi-Modal Temporal Fusion for Commonsense Video Question Answering.

Mobeen Ahmad, Geonwoo Park, Sang Uk Park. - VQA Therapy: Exploring Answer Differences by Visually Grounding Answers.

Chongyan Chen, Samreen Anjum, Danna Gurari. - Language-enhanced RNR-Map: Querying Renderable Neural Radiance Field Maps with Natural Language.

Francesco Taioli, Federico Cunico, Federico Girella, Riccardo Bologna, Alessandro Farinelli, Marco Cristani. - CLIP-Decoder: ZeroShot Multilabel Classification using Multimodal CLIP Aligned Representations.

Muhammad Ali, Salman Khan.

Awards

Honorable Mention

- Uni-NLX: Unifying Textual Explanations for Vision and Vision-Language Tasks.

Fawaz Sammani, Nikos Deligiannis. - Iterative Robust Visual Grounding with Masked Reference based Centerpoint Supervision.

Menghao Li, Chunlei Wang, Wenquan Feng, Shuchang Lyu, Guangliang Cheng, Xiangtai Li, Binghao Liu, Qi Zhao.

Best Paper Award

- SelfGraphVQA: A Self-Supervised Graph Neural Network for Scene-based Question Answering.

Bruno Cesar de Oliveira Souza, Marius Aasan, Helio Pedrini, Adín Ramírez Rivera.

Challenge Award

- Solution for SMART-101 Challenge of ICCV Multi-modal Algorithmic Reasoning Task 2023.

Xiangyu Wu, Yang Yang, Shengdong Xu, Yifeng Wu, Qingguo Chen, Jianfeng Lu.

Award Ceremony Photos

VLAR 2023 Venue

Paris Convention Centre

VLAR 2023 will be held at the Room S2 of the Paris Convention Centre at 08:45 AM - 05:45 PM CET on October 3, 2023. (YouTube Live Stream Link)

Organizers

Anoop Cherian

Mitsubishi Electric Research Laboratories (MERL)

Kuan-Chuan Peng

Mitsubishi Electric Research Laboratories (MERL)

Suhas Lohit

Mitsubishi Electric Research Laboratories (MERL)

Ram Ramrakhya

Georgia Tech

Honglu Zhou

NEC Laboratories America, Inc.

Kevin Smith

MIT

Tim Marks

Mitsubishi Electric Research Laboratories (MERL)

Joanna Matthiesen

Math Kangaroo USA, Association Kangourou sans Frontières, Notre Dame University

Program Committee

| Alessandro Salatiello | University of Tübingen |

| Ankan Bansal | Amazon |

| Can Qin | Northeastern University |

| Deep A. Patel | NEC Laboratories America, Inc. |

| Kai Li | NEC Laboratories America, Inc. |

| Man Luo | Mayo Clinic |

| Michael J. Jones | Mitsubishi Electric Research Laboratories |

| Paola Cascante-Bonilla | Rice University |

| Sai Saketh Rambhatla | Meta AI Research |

| Shailaja K. Sampat | Arizona State University |

| Suraj Srinivas | Harvard University |

| Tejas Gokhale | University of Maryland, Baltimore County |

| Trilok Padhi | Georgia State University |

| Xiangyu Peng | Georgia Institute of Technology |

| Xudong Lin | Columbia University |

| Yixin Liu | Lehigh University |